When Life Gives You Lemons, Make Amazing Mograph

How Yes Captain motion studio saved NodeFest 2025’s opening sequence.

Project Workflow and Outcome

NodeFest organizers Yes Captain had three weeks to save the event’s opening sequence when the studio tasked with the job backed out at the last minute. They leaned into the constraints by making the sequence look like previz/animatics, relying mainly on Cinema 4D’s viewport renderer and a minimal color palette to set the vibe. They quickly generated 3D models using Sparc3D, with varying results, and used DaVinci Resolve and Fusion for editing and compositing. Despite their struggles, Yes Captain created something that NodeFest attendees embraced.

We’re often told, paradoxically, to expect the unexpected, because things don’t always go as planned. When NodeFest organizers Yes Captain learned that the studio tasked with creating the opening sequence for NodeFest 2025 had backed out at the last minute, they came together for an epic save.

Since 2015, Australian studio Yes Captain has been creating motion graphics projects for clients like Specsavers, University of Melbourne, and Luna Park. All their experience came in handy when they took on the challenge of creating NodeFest 2025’s opening title sequence in three weeks, while simultaneously organizing the entire event.

We spoke with James Cowen from Yes Captain about how he and his team leaned into constraint to come up with a creative solution that put motion design at the forefront.

Tell us about Yes Captain and the type of work that you do.

Cowen: Yes Captain has been a motion studio since 2015, but since we started NodeFest the motion work has taken a little bit of a backseat. We've spent much of the last few years learning web and software development. One of our biggest projects has been NodePro, a talent platform to promote Australian and New Zealand motion designers, and the sister site to NodeFest. Giving the local industry a voice has been far more rewarding than any motion project.

How did you become involved in organizing NodeFest?

Cowen: Back in 2016 we really wanted to attend design and motion events like Blend, F5, NAB, and OFFF, but they were so far away. There was nothing like them in Australia or New Zealand, so NodeFest was born. It started small with 170 attendees, quickly growing to over 500 in 2019. Now the audience won't let us stop calling it the "Christmas party" of the motion industry.

Tell us about what happened and how you planned for the unexpected job of creating the opening sequence.

Cowen: The original studio booked to do the titles suddenly backed out three weeks before the event, so my small studio, Yes Captain, had to do them ourselves while simultaneously organizing everything for NodeFest.

Once faced with having to fill in, we broke down what was needed and what could be achieved in three weeks. We hit on the idea of making everything look like previz/animatics—something we knew the motion design audience would recognize. It was almost like an in-joke. But it's amazing how much design you can bring to Cinema 4D's viewport render.

It was a fun new challenge to make the viewport render follow a certain aesthetic and color palette when it's not typically designed for that.

Take us through the creation process using Cinema 4D and other tools.

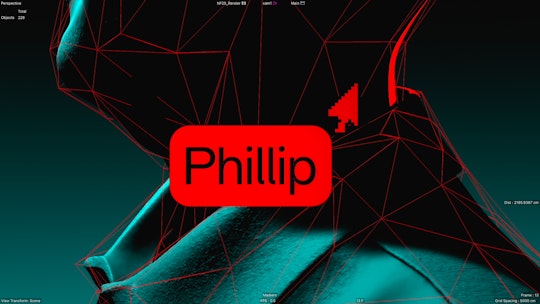

Cowen: We took a very iterative approach. We leaned into the time restriction and decided to use Cinema 4D’s viewport renderer for all shots, keeping everything looking like lo-fi, wireframe, animatic-style renders. We kept the color palette minimal and on brand with the festival colors. We even changed the viewport settings in Cinema 4D to match this (and now we can't switch back). This strategy allowed us to move fast.

We edited in DaVinci Resolve and composited in Fusion. We were able to experiment with ideas and shot types very quickly, bouncing new OpenGL renders to DaVinci Resolve to find what worked. It's amazing how much you can get out of Cinema 4D's viewport renderer!

What was your experience using AI tools to help put the project together?

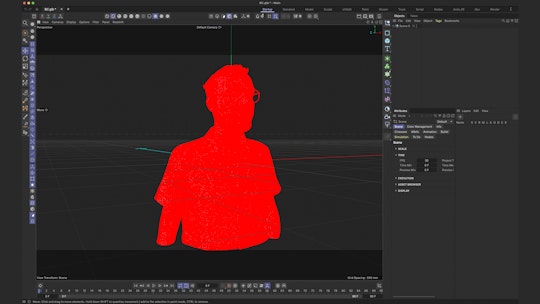

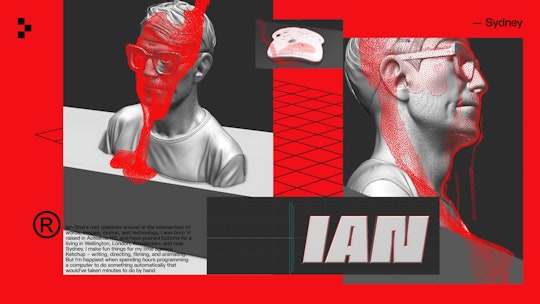

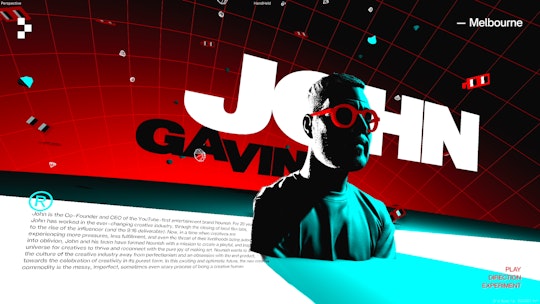

Cowen: Our concept was to show a 3D model of each presenter’s face in the titles. To do this, we turned to Sparc3D, a free AI-powered 3D model generation tool. It let us create a 3D model of each presenter's face from a single photo; we simply would not have been able to model them from scratch in the limited time frame and without a budget. The tool only accepts one photo, and the results are mixed. Nothing beats an artist creating an accurate model. Sometimes we got incredible detail, sometimes completely different glasses.

Each keynote speaker provided headshots that we used in traditional digital marketing for the event, so we uploaded the same images to Sparc3D. Because the software is free, there was sometimes a long wait with many people ahead in the queue. Don't close the browser!

Eventually we got a 3D model in GLB format. We took this into Cinema 4D. It was too heavy, as you can see in the wireframes (yes, our wireframe color was red to suit the look!). So we reduced the model's polygon count to make it more manageable and to show its form more clearly.
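For readers who want to gauge how heavy a generated GLB is before importing it, here is a minimal Python sketch. It is not the studio's workflow and does not use Cinema 4D's API; it only reads the JSON chunk of a binary glTF file and counts triangles. The function names are illustrative, and the sketch assumes a well-formed GLB whose first chunk is JSON, which is what the glTF 2.0 format requires.

```python
import json
import struct

GLB_MAGIC = 0x46546C67        # ASCII "glTF" read as a little-endian uint32
JSON_CHUNK_TYPE = 0x4E4F534A  # ASCII "JSON" read as a little-endian uint32


def read_glb_json(data: bytes) -> dict:
    """Return the parsed JSON chunk of a binary glTF (GLB) file."""
    # 12-byte header: magic, container version, total file length.
    magic, _version, _length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file")
    # First chunk header: chunk length, chunk type.
    chunk_length, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != JSON_CHUNK_TYPE:
        raise ValueError("first chunk is not JSON")
    return json.loads(data[20:20 + chunk_length].decode("utf-8"))


def count_triangles(gltf: dict) -> int:
    """Count triangles across all primitives drawn in TRIANGLES mode."""
    accessors = gltf.get("accessors", [])
    total = 0
    for mesh in gltf.get("meshes", []):
        for prim in mesh.get("primitives", []):
            if prim.get("mode", 4) != 4:  # 4 = TRIANGLES, the glTF default
                continue
            if "indices" in prim:
                # Indexed geometry: three indices per triangle.
                count = accessors[prim["indices"]]["count"]
            else:
                # Non-indexed geometry: three vertices per triangle.
                count = accessors[prim["attributes"]["POSITION"]]["count"]
            total += count // 3
    return total
```

With a hypothetical file named `speaker.glb`, `count_triangles(read_glb_json(open("speaker.glb", "rb").read()))` gives a rough triangle count to compare before and after polygon reduction.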

Some models looked better than others and weren't always an accurate representation of the speaker, so high-contrast lighting, wireframe overlays, and cloner FX were used to stylize each model. They were enough to get the job done, and we leaned into their stylized form.

What were the challenges you faced and how were you able to overcome them?

Cowen: The biggest challenge was coming up with enough unique sequences for each presenter without it seeming stale with such a limited palette. The fast, iterative nature of working with previz was the key to overcoming this.

How was this project received by NodeFest attendees?

Cowen: The response was really positive, especially with the audience knowing we had very limited time to create the piece after the originally booked studio pulled out late.

What did you learn throughout this process?

Cowen: The big thing we learned was how to use DaVinci Resolve and Fusion. We'd never used Fusion before. We had switched to DaVinci Resolve a year ago for editing and loved it. So, this was the perfect time to try Fusion. Ultimately, we found it to be a bit more suited to individual VFX shots than motion graphics. There are always quirks when learning something new, but it was fast and a lot more stable than what we previously used.

What advice would you give to studios or artists that find themselves in a similar last-minute predicament?

Cowen: Create and embrace restrictions and limits!

Nadia Yangin is a copywriter at Maxon.